http://psmweb.it/kjjfdjws/deahorb

Vishwas Gupta

7/21/2013 2:21:11 PM

The Cognos Stuff

Cognos and DW Blog

Sunday, July 21, 2013

Sunday, April 8, 2012

Snowflakes, Outriggers, and Bridges -- By Margy Ross ( Kimball Group )

Nice article by Margy, sharing with you all

Students often blur the concepts of snowflakes, outriggers, and bridges. In this Design Tip, we’ll try to reduce the confusion surrounding these embellishments to the standard dimensional model.

When a dimension table is snowflaked, the redundant many-to-one attributes are removed into separate dimension tables. For example, instead of collapsing hierarchical rollups such as brand and category into columns of a product dimension table, the attributes are stored in separate brand and category tables which are then linked to the product table. With snowflakes, the dimension tables are normalized to third normal form. A standard dimensional model often has 10 to 20 denormalized dimension tables surrounding the fact table in a single layer halo; this exact same data might easily be represented by 100 or more linked dimension tables in a snowflake schema.We generally encourage you to handle many-to-one hierarchical relationships in a single dimension table rather than snowflaking. Snowflakes may appear optimal to an experienced OLTP data modeler, but they’re suboptimal for DW/BI query performance. The linked snowflaked tables create complexity and confusion for users directly exposed to the table structures; even if users are buffered from the tables, snowflaking increases complexity for the optimizer which must link hundreds of tables together to resolve queries. Snowflakes also put burden on the ETL system to manage the keys linking the normalized tables which can become grossly complex when the linked hierarchical relationships are subject to change. While snowflaking may save some space by replacing repeated text strings with codes, the savings are negligible, especially in light of the price paid for the extra ETL burden and query complexity.

Outriggers are similar to snowflakes in that they’re used for many-to-one relationships, however they’re more limited. Outriggers are dimension tables joined to other dimension tables, but they’re just one more layer removed from the fact table, rather than being fully normalized snowflakes.Outriggers are most frequently used when one standard dimension table is referenced in another dimension, such as a hire date attribute in the employee dimension table. If the users want to sliceand-dice the hire date by non-standard calendar attributes, such as the fiscal year, then a date dimension table (with unique column labels such as Hire Date Fiscal Year) could serve as an outrigger to the employee dimension table joined on a date key. Like many things in life, outriggers are acceptable in moderation, but they should be viewed as the exception rather than the rule. If outriggers are rampant in your dimensional model, it’s time to return to the drawing board given the potentially negative impact on ease-of-use and query performance.

Bridge tables are used in two more complicated scenarios. The first is where a many-to-many relationship can’t be resolved in the fact table itself (where M:M relationships are normally handled) because a single fact measurement is associated with multiple occurrences of a dimension, such as multiple customers associated with a single bank account balance. Placing a customer dimension key in the fact table would require the unnatural and unreasonable divvying of the balance amongst multiple customers, so a bridge table with dual keys to capture the many-to-many relationship between customers and accounts is used in conjunction with the measurement fact table. Bridge tables are also used to represent a ragged or variable depth hierarchical relationship which cannot be reasonably forced into a simpler fixed depth hierarchy of many-to-one attributes in a dimension table.In these isolated situations, the bridge table comes to the rescue, albeit at a price. Sometimes bridges are used to capture the complete data relationships, but pseudo compromises, such asincluding the primary account holder or top rollup level as dimension attributes, help avoid paying the toll for navigating the bridge on every query.

When a dimension table is snowflaked, the redundant many-to-one attributes are removed into separate dimension tables. For example, instead of collapsing hierarchical rollups such as brand and category into columns of a product dimension table, the attributes are stored in separate brand and category tables which are then linked to the product table. With snowflakes, the dimension tables are normalized to third normal form. A standard dimensional model often has 10 to 20 denormalized dimension tables surrounding the fact table in a single layer halo; this exact same data might easily be represented by 100 or more linked dimension tables in a snowflake schema.We generally encourage you to handle many-to-one hierarchical relationships in a single dimension table rather than snowflaking. Snowflakes may appear optimal to an experienced OLTP data modeler, but they’re suboptimal for DW/BI query performance. The linked snowflaked tables create complexity and confusion for users directly exposed to the table structures; even if users are buffered from the tables, snowflaking increases complexity for the optimizer which must link hundreds of tables together to resolve queries. Snowflakes also put burden on the ETL system to manage the keys linking the normalized tables which can become grossly complex when the linked hierarchical relationships are subject to change. While snowflaking may save some space by replacing repeated text strings with codes, the savings are negligible, especially in light of the price paid for the extra ETL burden and query complexity.

Outriggers are similar to snowflakes in that they’re used for many-to-one relationships, however they’re more limited. Outriggers are dimension tables joined to other dimension tables, but they’re just one more layer removed from the fact table, rather than being fully normalized snowflakes.Outriggers are most frequently used when one standard dimension table is referenced in another dimension, such as a hire date attribute in the employee dimension table. If the users want to sliceand-dice the hire date by non-standard calendar attributes, such as the fiscal year, then a date dimension table (with unique column labels such as Hire Date Fiscal Year) could serve as an outrigger to the employee dimension table joined on a date key. Like many things in life, outriggers are acceptable in moderation, but they should be viewed as the exception rather than the rule. If outriggers are rampant in your dimensional model, it’s time to return to the drawing board given the potentially negative impact on ease-of-use and query performance.

Bridge tables are used in two more complicated scenarios. The first is where a many-to-many relationship can’t be resolved in the fact table itself (where M:M relationships are normally handled) because a single fact measurement is associated with multiple occurrences of a dimension, such as multiple customers associated with a single bank account balance. Placing a customer dimension key in the fact table would require the unnatural and unreasonable divvying of the balance amongst multiple customers, so a bridge table with dual keys to capture the many-to-many relationship between customers and accounts is used in conjunction with the measurement fact table. Bridge tables are also used to represent a ragged or variable depth hierarchical relationship which cannot be reasonably forced into a simpler fixed depth hierarchy of many-to-one attributes in a dimension table.In these isolated situations, the bridge table comes to the rescue, albeit at a price. Sometimes bridges are used to capture the complete data relationships, but pseudo compromises, such asincluding the primary account holder or top rollup level as dimension attributes, help avoid paying the toll for navigating the bridge on every query.

Thank You

Vishwas Gupta

Vishwas Gupta

Saturday, April 7, 2012

List and Crossttab not matching though using same query

Crosstab is more prone to picking data from cache than a list, so sometime when data is refreshed in database while you running report, your list may show the latest data but crosstab keep showing the old data. It becomes difficult to get the right report as even if you run report multple times crosstab would keep showing the old data, in this case you have to either log off and log in or you wait for few minutes.

Bursting Cognos Report to a file system

1) Go to the dispatcher -- ( See the picture below for reference )

2) Click on the dispatcher link to see the content manager service.

3) Go to the settings tab and click on edit on advance setting line -

4) Create following 2 variables and provide the path where you want to get the files bursted.

5) Save it and go to cognos configuration to do the following setting.

6) Run the report that you have to burst with the following settings.

7) You will get the output in D:/Report folder, now you want that bursted reports should get proper names or they should get names according to there bursted key.

8) Create a notepad file giving it a name "burstKey.bat" and paste the following contents and save it in D:\Reports folder.

@echo off

Rem this batch file is launched with a working directory of set dest=D:/Reports

echo "BurstKey Script" >> %dest%/batch.txt

echo Batch File Log >> %dest%/batch.txt

rem Set the values for the PDF file and XML

rem to environment variables

rem the reason for this is the scripting language

rem cannot read variables as parameters

set parameter_pdf=%1%

set parameter_xml=%2%

rem Logs the variables to a batch log file

echo values set >> %dest%/batch.txt

echo Parameter_pdf: %parameter_pdf% >> %dest%/batch.txt

echo Parameter_xml: %parameter_xml% >> %dest%/batch.txt

rem Calls the script file

call %dest%/burstKeyRename.vbs

echo Completed >> %dest%/batch.txt

9) create a file "burstKeyRename.vbs" and paste the following contenets in there and save it in D:\Reports folder.

'

' Last updated : Sept 24, 2007'

Set objFSO = CreateObject("Scripting.FileSystemObject")

Set xmlDoc = CreateObject("MSXML.DOMDocument")

Set WshShell = WScript.CreateObject("WScript.Shell")

Set WshSysEnv = WshShell.Environment("process")

' Values for Environment can be "user", "system", "volitile" and "process

' The value "process" is the only valid one for this type of operation.

Set WshSysEnv = WshShell.Environment("process")

' To check the value for process environment variable

' this can be tested at the command prompt.

' Uncomment the next line and run this from command line

' WScript.Echo WshSysEnv("parameter_xml")

' Initializing Variables

xFile = WshSysEnv("PARAMETER_XML") 'Name of the XML file

sFile = WshSysEnv("PARAMETER_PDF") 'Name of the PDF file

' ***** Start override section for testing outside Cognos environment *****

'

' Set the following if-then condition to "true" for local testing using files that

' have already been burst. If the if=then condition is set to "false", then the

' environment variables must be set properly otherwise a run-time error will occur.

'

If (false) Then

'The filename component of the output file. Do not include the extension.

tempName = "D:\C83Outputs\wottcattondxp\TestOutputs\911_1190657394280"

' Derive the name of the XML descriptor file

xFile = tempName & "_desc.xml"

' Derive the name of the new output file.

sFile = tempName & ".pdf"

End If

'

' ***** End override section for testing outside Cognos environment *****

sMsg = ""

sNewFileName = ""

'

' Read the XML descriptor file created by CRN/Cognos 8 to get the value of the

' value will be used as the new filename. If there is no value in the

' be created. It is assumed that the value of the

' reserved characters the underlying OS uses for filename.

'

xmlDoc.async = False

xmlDoc.Load xFile

If (xmlDoc.parseError.errorCode <> 0) Then

sMsg = xFile & " : Error parsing XML " + xmlDoc.parseError.reason

Else

Set rootXML = xmlDoc.documentElement

Set oNodeList = rootXML.childNodes

iNode = 0

For Each Item in oNodeList

If (xmlDoc.documentElement.childNodes.Item(iNode).basename = "burstKey") Then

sNewFileName = xmlDoc.documentElement.childNodes.Item(iNode).text

If (sNewFileName = "") Then

sMsg = xFile & " :

Else

sMsg = xFile & " : Burst key value is '" & sNewFileName & "'"

End If

' This if condition was added to support the use of CAMID for the burst key

if (instr(sNewFilename, "CAMID")) Then

intFirstPosition = instr(sNewFilename, ":")

intSecondPosition = instr(intFirstPosition, sNewFilename, ")")

sNewFileName = mid (sNewFilename, intFirstPosition + 1, intSecondPosition - (intFirstPosition + 2))

sMsg = xFile + " : Value extracted from CAMID is '" & sNewFileName & "'"

End If

End If

iNode = iNode + 1

Next

Set oNodeList = Nothing

Set rootXML = Nothing

End If

'

' If there is a new filename, make of copy of it otherwise just log an error. A local log file with a name

' that is derived using the filename given to the output by CRN/Cognos 8 will be created so it is easy

' to determine if the file copied successfully or not. Since this information will also be written to the

' persistent log file, writing to the local log file can be bypassed if writing it creates too much clutter in the

' location specified in CM.OUTPUTLOCATION.

'

if (sNewFileName <> "") Then

sNewFileName = getPath(sFile) & sNewFileName & ".pdf"

writeLocalLogFile sFile, sMsg & vbCrLf & "Copying file named '" & sFile & "' to '" & sNewFileName & "'" & vbCrLf

objFSO.CopyFile sFile, sNewFileName

Else

' Set this variable to an error message so it will be picked up below when writing to the persistent log file.....

sNewFileName = "

writeLocalLogFile sFile, sMsg & vbCrLf

End If

'

' Update the persistent log file with the result. This log file will have data appended to it in order to keep the history.

'

sMsg = "----- Start of entry -----" & vbCrLf

sMsg = sMsg & "Date : " & date & vbTab & "Time : " & time & vbCrLf

sMsg = sMsg & vbCrLf & "Original Name :" & sFile & vbCrLf

sMsg = sMsg & "New Name : " & sNewFileName & vbCrLf

sMsg = sMsg & "----- End of entry -----" & vbCrLf & vbCrLf

sPersistLogFile = getPath(sFile) & "crn_pdf_rename.txt"

writePersistLogFile sPersistLogFile, sMsg

'

' All done.. Release references to the objects used in this app.

'

Set objFSO = Nothing

Set xmlDoc = Nothing

Set WshShell = Nothing

Set WshSysEnv = Nothing

Function getPath(sFileName)

sPathOnly = ""

lastSlashPos = InStrRev(sFileName, "\")

if (lastSlashPos > 0) Then

getPath = mid (sFileName, 1, lastSlashPos)

End If

End Function

'

' writeLocalLogFile

' Create a log file using a name derived from the filename that was generated by

' CRN/C8 before it was written to the location specified by CM.OUTPUTLOCATION.

'

Sub writeLocalLogFile(sFileName, sData)

sLogFile = left(sFileName, instr(sFileName,".")-1) + "_log.txt"

writeLogFile sLogFile, sData

End Sub

'

' writePersistLogFile

' Write a record to the persistent log file

'

Sub writePersistLogFile(sFileName, sData)

writeLogFile sFileName, sData

End Sub

'

' writeLogFile

' Generic routine to open a file, append a record to it and close the file

'

Sub writeLogFile(sFileName, sData)

If (objFSO.FileExists(sFileName)) Then

Set logFile = objFSO.GetFile(sFileName).OpenAsTextStream(8)

Else

Set logFile = objFSO.CreateTextFile(sFileName)

End If

logFile.Write sData

logFile.Close

Set logFile = Nothing

End Sub

now follow the 6th step again and you will get proper pdf output with name same as burst key.

Vishy

Friday, April 6, 2012

Auto Refresh of HTML output

Put this code into an HTML item and place that at the bottom part of the report, HTML output will refresh every 5 mins. |

Thursday, April 5, 2012

#prompt# macro

We use #prompt(...)# macro function in the FM so that few things can be dynamically decided. But #prompt # works with one value only I mean you get a text box prompt when you run a report using #prompt # macro.

but if you want that user should be able to see multiple values so that he can select one of values rather then typing in the text box prompt.

It will also help developer as there is no chance user making mistake by typing wrong value.

To do this just create any prompt at the report studio level with the same name that you have given to prompt in the #prompt(..)#.

Report studio prompt will override the FM prompt and values you select will be taken as #macro # prompt value only. This way you will be able to do that.

Thanks

Vishwas

but if you want that user should be able to see multiple values so that he can select one of values rather then typing in the text box prompt.

It will also help developer as there is no chance user making mistake by typing wrong value.

To do this just create any prompt at the report studio level with the same name that you have given to prompt in the #prompt(..)#.

Report studio prompt will override the FM prompt and values you select will be taken as #macro # prompt value only. This way you will be able to do that.

Thanks

Vishwas

Wednesday, April 4, 2012

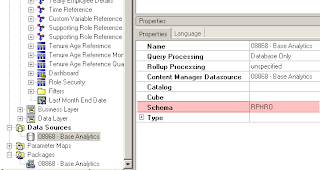

Cognos cube fast build

while building cube two things that can make cube creation fast is i) making datasource which is bringing fact ..UNCHECK GENERATE CATEGORIES.Only dimensionsal source should populate categories and not the fact source. ii) If last level in dimensions which is defined unique using the surrogate key then make sure that you remove - VERIFY UNIQUNESS selection for that dimension. |

Report failing after cube rebuild

while building transformer model we always define the unique id and label but most of the time we forget to define the category id's which should not be left for transformer to decide while building the cube. Category id should come from the dimension( database) itself. It will help when cube is rebuild due to any xyz issue as all your exisiting cube based report will keep on working otherwise your exisitng report will start throwing the error as it will search for cognos defined category id's in the cube which is not there anymore due to rebuild of the cube. So always define three minimum things for a level in a dimension id,lable and category id so that life is easy. |

IQD not getting created via Cognos FM

| if for query subject corressponding iqds are not getting created then make sure that in package definition ..SELECT COMPONENTS AND CHILDRENs is selected for namespace in which iqd query subjects are located. |

short cuts v/s alias

| Short cuts -- should be used when you need less maintainance as well as when you have star schema grouping and you need one of the query subject to behave as confirmed dimension. Alias -- mainly used when you want a role playing dimension. |

Joins at which layer ??

1) Where to define joins in FM layers, few says at database layer and few says at business layer. Let us see where to do what - a) data layer - making join at the data base layer makes more sense when you are modelling a relational data so that you only get those tables in the query which you really are using. This is called minimized query. b) business layer - if you define joins here or I say if you don't define joins in the data layer then your data layer is free, on other layers you can redifne joins when need arise. |

Saturday, March 31, 2012

Time Dimension Design from Cognos Perspective

| Cognos do provide tecgniques like determinants at FM level to avoid double counting, but we cognos guys should make sure we don't reach to that level if possible. The best is to flatten the hierarchy, most of the time we face this issue in time dimension but if we can flatten by not having surrogate key for month level and only have it for day level, we will be good. Directly join month level fact with this day level surrogate key... |

Cognos FM best practices

We all know that implementing best practices at FM is very important as all reports/cubes/metric designer are dependent on it.

We may not follow best practice at report studio or analysis studio level as these things don't have any other objects which are dependent on them.

But at cube and at FM and at metric designer it is imperative to follow best practice.

Let us discuss PRACTICALY best practice at FM level.

Create following layers

Data layer - vanilla database table objects , no joins at this layer. Define the query items property here so that following layers can inherit that.

Business layer - crate joins and determinants and give business names to query items.

Dimension leyer - if you are doing a DMR.

Presentation layer or short cut layer - better you call it a short cut layer so that you know you have to use short cut here , you might want crate star schema groupings here if required.

If there is a confirmed dimension that lies in 2 star schema grouping better you make join again at presentation layer b/w short cuts so that cognos can do stitching .

Thank You,

Vishwas Gupta

We may not follow best practice at report studio or analysis studio level as these things don't have any other objects which are dependent on them.

But at cube and at FM and at metric designer it is imperative to follow best practice.

Let us discuss PRACTICALY best practice at FM level.

Create following layers

Data layer - vanilla database table objects , no joins at this layer. Define the query items property here so that following layers can inherit that.

Business layer - crate joins and determinants and give business names to query items.

Dimension leyer - if you are doing a DMR.

Presentation layer or short cut layer - better you call it a short cut layer so that you know you have to use short cut here , you might want crate star schema groupings here if required.

If there is a confirmed dimension that lies in 2 star schema grouping better you make join again at presentation layer b/w short cuts so that cognos can do stitching .

Thank You,

Vishwas Gupta

Stitched query, star schema grouping and cognos FM

Many a times instead of using cognos own automatic functionalities , seen people using star schema groupings.

Start schema groupings are mainly used when you want to present clean picture of query subjects to the user so that they don't mix business subjects in the report.

It should not be used when you are having multiple facts in design as multiple facts will be taken care automatically when cardenilities are set 1-n between facts and dimensions. FM will automatically resolve multiple paths via stitched queries.

Thank You,

Vishwas Gupta

Start schema groupings are mainly used when you want to present clean picture of query subjects to the user so that they don't mix business subjects in the report.

It should not be used when you are having multiple facts in design as multiple facts will be taken care automatically when cardenilities are set 1-n between facts and dimensions. FM will automatically resolve multiple paths via stitched queries.

Thank You,

Vishwas Gupta

Determinants and FM layers

Determinants in framework manager has to be defined in the layer where you have joins defined.

If you have defined joins at business layer and defined determinants at dimension layer then nothing will work !!

Thank You,

Vishwas Gupta

If you have defined joins at business layer and defined determinants at dimension layer then nothing will work !!

Thank You,

Vishwas Gupta

Wednesday, September 29, 2010

Cognos TM1 can replace Cognos Powerplay

We always thought the Congos Powerplay is the best tool available for OLAP analysis. But it is going to be changed now.

Cognos TM1 is much much better in OLAP analysis.

OLAP is actually a concept it has nothing to do with databases and queries all those technical things. It is just a concept which says that user should be able to do analysis on multi dimensional way and on pre-summarized data. Thats it.

Cognos Powerplay has lot of restriction like measure is bounded with the dimensions. Once you create a dimension you have to use the dimension in the same manner , you can not use the same dimension with few changes.Any changes you need in dimension you have to create entirry new dimension.

In Cognos TM1 all these constraints are not there. Measure is treated as dimensino and you can create subset of the dimension.

It is so flexible. Dont be suprised if in coming times you see Powerplay is replaced by Cognos TM1.

Yours

Vishwas

Friday, May 21, 2010

Cross Product Confusion

The following property that we have , it doesn't mean that we will have cross join only after making this property 'Allow'.

By default its value is 'Deny".

We can always have cross-joins between the queries or we can make some kind of arrangements between the qureries and have cross-join and get our job done. This property stands for stopping any kind of cross-join between DATABASE TABLES.

Thanks

Vishwas

Sunday, May 16, 2010

DRAFT:- FM Preparation

Following points must be taken into consideration before creating FM model

1) What kind of reports you have. Dimensional or Relational i.e are you going to have drillup/down on reports (dimensional) or you have simple reports(relational). You might have a case where few reports are dimensional and few are relational , in this case you have to decide which table is going dimensional and which table going relational.

2) What kind of filters your reports is going to have.Are there common filters.?If there are common filters then you should create them in report itself.

3) Do you have any report requiring 2 facts join. If you have then you have to test you FM for that to make sure you FM is creating stitched query or outerjoin query. If FM is not creating stitched query then you will not be able to get the right kind of results. If ther is lot of confusion among different tables joins then make separte start schema grouping for that particular report requirments so that when those 2 fact table are taken in the query, no other table affect that.

4) Ask client if he has plan to use model in query studio. If he says "YES" then , : ) your job is doubled. You have to make sure from the beginning that your model is satisfying needs of report authors(Report Studio) as well as Adhoc reports(Query Studio).If you can afford to make 2 model , one for query studio and one for Report Studio , do that if your model is big and complex.

5) All the joins should be defined at the Data Layer. Never do anything at the Data Layer other then making joins between the tables if they have not been imported from the database or not created by FM automatically while importing tables from the database.

6) Never get confused by different 'Layers' of the FM. Layers are nothing but they are like 'Folders' of your desktop. It all depends upon you , how you arrange your objects. Same requirement may be fulfilled by creating 10 layers or 2 layers. There is no fix rule.But FM is a bit complex and all your reports depend upon the FM so we create differnt layers for each thing that we do like creating business names, creating dimensions, short-cuts for report author presentation just to makre sure if somebody else takes over he is clear.

7) Please read carefully what is a namespace and what are other objects of FM. Namespace is very different thing then other objects. Also take care when you copy and paste objects.And take very much care while building new query subjects from below layer, be mindful of what are you doing. Becuase any error and your entire day will be gone.

8) You have to setup row level security at the FM level. And if model is going to be used in query studio as well you have to alter the defintion of all those facts so that if anything is used from those facts row level security is applied. But at the same time you have to also make sure it should not hamper the report studio reports. So to achieve that make sure prompt name ( ?xyz? ) is same in FM query subject(Fact) as well in report studio.

..to be continued...

1) What kind of reports you have. Dimensional or Relational i.e are you going to have drillup/down on reports (dimensional) or you have simple reports(relational). You might have a case where few reports are dimensional and few are relational , in this case you have to decide which table is going dimensional and which table going relational.

2) What kind of filters your reports is going to have.Are there common filters.?If there are common filters then you should create them in report itself.

3) Do you have any report requiring 2 facts join. If you have then you have to test you FM for that to make sure you FM is creating stitched query or outerjoin query. If FM is not creating stitched query then you will not be able to get the right kind of results. If ther is lot of confusion among different tables joins then make separte start schema grouping for that particular report requirments so that when those 2 fact table are taken in the query, no other table affect that.

4) Ask client if he has plan to use model in query studio. If he says "YES" then , : ) your job is doubled. You have to make sure from the beginning that your model is satisfying needs of report authors(Report Studio) as well as Adhoc reports(Query Studio).If you can afford to make 2 model , one for query studio and one for Report Studio , do that if your model is big and complex.

5) All the joins should be defined at the Data Layer. Never do anything at the Data Layer other then making joins between the tables if they have not been imported from the database or not created by FM automatically while importing tables from the database.

6) Never get confused by different 'Layers' of the FM. Layers are nothing but they are like 'Folders' of your desktop. It all depends upon you , how you arrange your objects. Same requirement may be fulfilled by creating 10 layers or 2 layers. There is no fix rule.But FM is a bit complex and all your reports depend upon the FM so we create differnt layers for each thing that we do like creating business names, creating dimensions, short-cuts for report author presentation just to makre sure if somebody else takes over he is clear.

7) Please read carefully what is a namespace and what are other objects of FM. Namespace is very different thing then other objects. Also take care when you copy and paste objects.And take very much care while building new query subjects from below layer, be mindful of what are you doing. Becuase any error and your entire day will be gone.

8) You have to setup row level security at the FM level. And if model is going to be used in query studio as well you have to alter the defintion of all those facts so that if anything is used from those facts row level security is applied. But at the same time you have to also make sure it should not hamper the report studio reports. So to achieve that make sure prompt name ( ?xyz? ) is same in FM query subject(Fact) as well in report studio.

..to be continued...

Friday, May 14, 2010

Conditional Layouts

I didn't know about conditonal layouts until somebody asked me about it. I was not able to answer him and that was frustrating.

I know we work in technology field and there is every possibility that your work may not match with others work even if you both are working in say Cognos. Your insights and knowledge may be different from others who work in Cognos.

I wanted to know about conditional layouts but didn't find anything on net. I had to dig in report studio itself and find what it is.

When I came to know what it is , first word I uttered was "ABSURD !!!" .This is nothing but rendering !!!! We do more complex things and sometime we miss simple and easy things ,it also depends upon what kind of requirements your clients is presenting.If you keep working in XYZ area in Cognos , even if you have 10 years of experince you won't be able to answer the questions related to ABC area asked by 1 year experienced person in ABC.

But thats what I also like about technology ,everybody is having something to teach other person !!!!!

So now come back to Conditional layouts. These are the report layouts based on some condition.

For example client may run report in HTML or Excel or PDF. But if client doesn't want to include some columns in PDF version and add few more column in Excel version that what would you do ??? This is where conditional layouts are used.

Thanks

Vishwas

Guessing Granularity ???

Understanding this term is easy. One liner like "Table can provide up to which level of detail is known as its granularity" can make anybody understand what is it. But its easiness stops here.

Why am I saying 'its easiness stops here" ?? if you want to get a report coming out of 2 tables with different granularity then you will find the reason.

If number of tables increasing in your query having different granularity then you are just heading for some tough times if you don't know how to handle granularity.

If you are just writing queries manually then you can handle granularity issues easily but when queries is being created by cognos FM then your result would be wrong if you have not handled granularity carefully. You would start getting double counting of fact data and all those kind of issues that you don't want to see.

When I am talking about granularity then I am talking about facts and not dimensions.If you have a requirement where you need to join 2 facts. How do you join 2 facts with altogether different granularities ?

If somebody thinks that he can directly join 2 facts on common keys then he is planning for disaster.This is not the best idea and no body suggest it. There is one more reason you should not do it,because you can not keep customizing your model dedicated to a requirment.Anything that we do in the model we should try that we are generalizing it for other future requirements as well.

Getting a report out of 2 facts with different granularity means helping 2 people talk having different mother tongue.So what we do here, we have to bring a person who knows both the mother tongues,right ? Yes that is what we will have to do with these 2 facts. We will have to bring a commong dimension between these 2 facts.We also say these common dimension, conformed dimension. I hope you got the idea how to join 2 facts.Thanks

Vishwas

Thursday, May 13, 2010

FM making you go MAD ???

I have created a prompt for query studio,this prompt is basically made for inforcing row level security. User can select using which role he wants to run report. For that you have put filters in the corresponding FACT table so that whenever something is dragged from the FACT table and query studio report is run.. user is asked to select the ROLE.

But when you run the report , you see all the roles of ROLE TABLE are coming in the report and not those roles that are assigned to you.

ID | Role |

John | Manager |

John | Executive |

David | Analyst |

So when John is running report , he is saying all the roles in the prompt , Manager, Executive and Analyst. But this is wrong.

But why this is happening ???

So to correct this you go and put this filter exclusively in the ROLE TABLE query subject, you republish the package. But still you get the same kind of result. You would go made surely.. becuase the same thing happened with me :(

You do one thing , you go one layer down and put the same filter in ROLE TABLE query subject. Re-publish the package and now run the report. Now you would see only Manager and Executive roles appearing for John.

Why Why Why ??? I also don't know .. This is weird!! This is absurd !!

Only thing I can guess is upper layer was inforcing the row level security so below layer should bring all the things which are required

Thanks

Vishwas

Why the HELL my DRILL is not Working ??

Drill up and drill down works, but in your case if it is not working then check following things.

1) Data Menu > Drill Behaviour > Enable Drill up and Down check box is checked !!!!

2) You have not played with the dataitem on which you have to drill because DRILLING is very sensitive and it is totally handled by Framework Manager.If you do something in that dataitem like doing some calculation or using CASE_WHEN , drilling behaviour will be gone.

Even after having above 2 points ok,still the DRILL is not working ???

That means your query is very complex and Cognos is forgetting that it has to drill on that particular dataitem.

Do one thing go in the query and create a Member Set on that data item and define advanced drill behaviour on that dataitem so as to make cognos remember its drill property after the report is run.

Thanks

Vishwas

Preparing FM

Following points must be taken into consideration before creating FM model

1) What kind of reports you have. Dimensional or Relational i.e are you going to have drillup/down on reports (dimensional) or you have simple reports(relational). You might have a case where few reports are dimensional and few are relational , in this case you have to decide which table is going dimensional and which table going relational.

2) What kind of filters your reports is going to have.Are there common filters.?If there are common filters then you should create them in report itself.

3) Do you have any report requiring 2 facts join ? Getting the answer of this question if very importatnt.

4) Ask client if he has plan to use model in query studio. If he says "YES" then , : ) your job is doubled. You have to make sure from the beginning that your model is satisfying needs of report authors(Report Studio) as well as Adhoc reports(Query Studio).If you can afford to make 2 model , one for query studio and one for Report Studio , do that if your model is big and complex.

4) Ask client if he has plan to use model in query studio. If he says "YES" then , : ) your job is doubled. You have to make sure from the beginning that your model is satisfying needs of report authors(Report Studio) as well as Adhoc reports(Query Studio).If you can afford to make 2 model , one for query studio and one for Report Studio , do that if your model is big and complex.

5) All the joins should be defined at the Data Layer. Never do anything at the Data Layer other then making joins between the tables if they have not been imported from the database or not created by FM automatically while importing tables from the database.

6) Please read carefully what is a namespace and what are other objects of FM. Namespace is very different thing then other objects. Also take care when you copy and paste objects.And take very much care while building new query subjects from below layer, be mindful of what are you doing. Becuase any error and your entire day will be gone.

8) You have to setup row level security at the FM level. And if model is going to be used in query studio as well you have to alter the defintion of all those facts so that if anything is used from those facts row level security is applied. But at the same time you have to also make sure it should not hamper the report studio reports. So to achieve that make sure prompt name ( ?xyz? ) is same in FM query subject(Fact) as well in report studio.

..to be continued...

Wednesday, May 5, 2010

Calculated Measures

Cognos developers are sometimes confused about calculated measures. The one thing they understand is that, it is called a calculated measure because we put somekind of calculation into it. There is one more meaning of the calculated measure which helps in using it in more better manner,Calculated measure calculates itself depending upon where it is placed in Lists/Crosstabs. You can say Calculated Measure is more dynamic in nature than other measures. This definition gave me clear understanding of it and helped me use it in better way in reports. I hope it helps you as well. |

Tuesday, May 4, 2010

Disable drill down at particular level

You want to allow drill up on that level you need to do following

goto

1) Data - Drill Behaviour - select that particular level, both in drill up and drill down section.

Goto Advanced tab and for that particular level ; Define drill up behaviour as "Depth based Expression" and

Drill down behaviour as "Preserve".

This will enable drill up and disable drill down for that particular level.

2) Data - Drill Behaviour - Select that particular level and set the depth based expression to for example 2, that column or hierarchy will drill only till level 2.

1) Data - Drill Behaviour - select that particular level, both in drill up and drill down section.

Goto Advanced tab and for that particular level ; Define drill up behaviour as "Depth based Expression" and

Drill down behaviour as "Preserve".

This will enable drill up and disable drill down for that particular level.

2) Data - Drill Behaviour - Select that particular level and set the depth based expression to for example 2, that column or hierarchy will drill only till level 2.

------------------------------------

Tuesday, January 12, 2010

Bridge table

An example of a Bridge table would be relationship table between student_master and courses_master. A Student may participate in multiple courses and obviously in a course there will be multiple students. In this scenario to maintain relationships between student_master and courses_master we need another table which may be called Student_courses. This table will have all relationships between Students and Courses.A bridge table is a table that only contains the keys between the two tables in a many-to-many relationship. These tables typically consist of mostly key fields the primary keys of the two tables needing the many to many relationships. Sometimes there are more data elements but often there are only two columns both foreign keys.

A table which maintained to define the relationship between two different tables is known as bridge tables. For example say there are two tables one table with employee information (an employee table) and other with deparment details. Now a bridge table coming to picture to maintain the relationship between an employee with his/her department. which contains informations like which employee belongs to which department. Here is a example to understand More precisely ,this solution will help when one employee is working for different departments at the same point of time.

Employee table--------------------

EMP_CD EMP_name DOJ

1001 MR.X 12-06-2006

1002 Ms.Y 24-11-2004

1003 MS.K 25-02-2004

Department table -----------------------

DEPT_CD DEPT_NAME SECTOR_CD MANAGER_NM

IT_DEV S/W Developer SDV MR.XL

MNGNT HR Management HRD MS.DS

EMP_DEPT (Bridge Table)---------------------------------

EMP_CD DEPT_CD

1001 MNGNT

1002 IT_DEV

1003 IT_DEV

A table which maintained to define the relationship between two different tables is known as bridge tables. For example say there are two tables one table with employee information (an employee table) and other with deparment details. Now a bridge table coming to picture to maintain the relationship between an employee with his/her department. which contains informations like which employee belongs to which department. Here is a example to understand More precisely ,this solution will help when one employee is working for different departments at the same point of time.

Employee table--------------------

EMP_CD EMP_name DOJ

1001 MR.X 12-06-2006

1002 Ms.Y 24-11-2004

1003 MS.K 25-02-2004

Department table -----------------------

DEPT_CD DEPT_NAME SECTOR_CD MANAGER_NM

IT_DEV S/W Developer SDV MR.XL

MNGNT HR Management HRD MS.DS

EMP_DEPT (Bridge Table)---------------------------------

EMP_CD DEPT_CD

1001 MNGNT

1002 IT_DEV

1003 IT_DEV

Create Data Source and Data Source Connections

Create Data Source and Data Source Connections

The DB2 database name that you type must use uppercase letters. Also, in Framework Manager, the schema names that you type for the DB2 data sources must use uppercase letters.

The DB2 database name that you type must use uppercase letters. Also, in Framework Manager, the schema names that you type for the DB2 data sources must use uppercase letters.

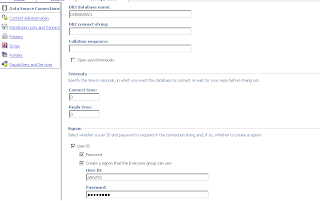

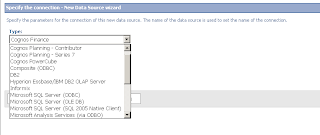

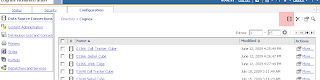

Here we are creating a Data Source now -

1) Clicked on pink color icon

2) A screen comes, give datasource name and clik next , following screen appears

3) Select the database that you want to connect to and click next , following screen will appear.

You just need to give database name and ID and Password then you can test the connection.

Connection string will get automatically build by the cognos.

Here you can click on finish button and your datasouce is ready to be used in FM/Report Studio.

Framework Manager Determinants

Let us understand the determinants in simplest terms.

Company CEO Empl

-----------------------------------------------------

Ennov Vijay Vishwas

Ennov Vijay Mritunjay

Ennov Vijay Farrukh

Company Revenue

---------------------------------------

Ennov 500000

Carrot 400000

When above 2 tables are joined on Company field and you bring following items in the query Company,CEO, Revenue. The the following will be the output

Company CEO Revenue

-------------------------------------------------

Ennov Vijay 500000

Ennov Vijay 500000

Ennov Vijay 500000

You got three rows because Ennova had three employees, here cognos was not able to understand that granulirity of both these tables was different. You have to defined it explicitely and end product of this is called DETERMINANTS.

Here in the first table you have to define 2 determinants CEO and Company and and check the check box of group by. Now when you run the query in cognos you will find that repeation is not there.

Thanks

Vishwas

Company CEO Empl

-----------------------------------------------------

Ennov Vijay Vishwas

Ennov Vijay Mritunjay

Ennov Vijay Farrukh

Company Revenue

---------------------------------------

Ennov 500000

Carrot 400000

When above 2 tables are joined on Company field and you bring following items in the query Company,CEO, Revenue. The the following will be the output

Company CEO Revenue

-------------------------------------------------

Ennov Vijay 500000

Ennov Vijay 500000

Ennov Vijay 500000

You got three rows because Ennova had three employees, here cognos was not able to understand that granulirity of both these tables was different. You have to defined it explicitely and end product of this is called DETERMINANTS.

Here in the first table you have to define 2 determinants CEO and Company and and check the check box of group by. Now when you run the query in cognos you will find that repeation is not there.

Thanks

Vishwas

Monday, January 4, 2010

Drill Level Number

You can find the level numbers dynamically.This is not something that is there in documentations

and even you if ask Cognos about it they would reply that this is not possible dynamically.

But this is very much much possible. You will have to apply your thinking and think in terms of what happens when you click on drill down link, you will get the answer.

Thanks

Vishwas

and even you if ask Cognos about it they would reply that this is not possible dynamically.

But this is very much much possible. You will have to apply your thinking and think in terms of what happens when you click on drill down link, you will get the answer.

Thanks

Vishwas

Friday, January 1, 2010

PI

Performance improvement Ideas for a Report.

1) Only those data items which are required.

2) use a single query for crosstabs and make crosstabs have similar dataitems placed, it will help cognos generate a single query.

3) Use case when condition in filter rather then in dataitem and then use that dataitem in the filter.

4) use FM for fitering data

5) do not use BETWEEN

6) If you are doing inner join b/w 2 quries then better write your own quries.

6) If you are doing inner join b/w 2 quries then better write your own quries.

7) make sure Minimized sql is created by the FM and proper joins are there.

8) Sometime you create a single query and use it in second query. In second qeury you put a filter on the first query. This is will definately slow the whole process. Instead of putting a filter on second query , do something a put a fitler in first query itself so that limite data is provided to second query.

9)have the indexes placed on the dataitmes you have filter on.

10)Formatting funtions also takes time.

11) Dont do time based calculations on run time.

Thursday, December 31, 2009

DataSource Connection

DataSouce can be defined at 2 places.

a) Cognos Connection

b) Framework Manager

It actually has 2 parts as well. Datasource which is kind of physical connection to the database and second is the datasouce connection which contains the credentials to conenct to the database.

If you see any datasource at the cognos connection,you can actually click on it and you will find the datasource connection(s) under it.You may have multiple datasouce connection for a datasource. When user will run report, he will be prompted to select which datasource connection he wants to use for report.Signon is kind of reusable thing,while defining datasouce connection,you can say that make it signon as well, if you do that then any other person can also use that signon and create many more datasources.He would just need to copy and paste singon from one place to other place(under the new datasource). This work perfactly when you have single ID/PSWD for multiple databases, you won't need that administrator( person knowing ID/PSWD) to enter ID/PSWD for you again and again for each databases.

At Framework manager you can not just right click the Data sources Folder and create a new datasources.You will need to run MetaSata Wizard to get to that place from where either you can bring a existing datasource into your model or create a new datasouce.You just click on namespace and then goto to ACTION menu and click MetaData Wizard. From here procedure is same as you create a datasource from Cognos Connection.

To be continued.......

a) Cognos Connection

b) Framework Manager

It actually has 2 parts as well. Datasource which is kind of physical connection to the database and second is the datasouce connection which contains the credentials to conenct to the database.

If you see any datasource at the cognos connection,you can actually click on it and you will find the datasource connection(s) under it.You may have multiple datasouce connection for a datasource. When user will run report, he will be prompted to select which datasource connection he wants to use for report.Signon is kind of reusable thing,while defining datasouce connection,you can say that make it signon as well, if you do that then any other person can also use that signon and create many more datasources.He would just need to copy and paste singon from one place to other place(under the new datasource). This work perfactly when you have single ID/PSWD for multiple databases, you won't need that administrator( person knowing ID/PSWD) to enter ID/PSWD for you again and again for each databases.

At Framework manager you can not just right click the Data sources Folder and create a new datasources.You will need to run MetaSata Wizard to get to that place from where either you can bring a existing datasource into your model or create a new datasouce.You just click on namespace and then goto to ACTION menu and click MetaData Wizard. From here procedure is same as you create a datasource from Cognos Connection.

To be continued.......

Degenerated,Confirmed and Junk Dimension

Degenerated Dimensions- In simple terms this is actualy is not a dimension because in dimension modelling we do not have a separate dimension for this. Its bit confusing right? Let us understand it in more detail - We have seen in lof of examples of degenerate dimension on web where we get example of Invoice number,Line number in a bill. We will take one most simple example. We have a fact table where in we store the results of students, I also need rank of students in the fact table and I do not want rank to appear as 1,2,3 but I want rank to appear as FIRST ,SECOND ,THIRD. Now here one thing you can do is to add 1 column that will store values like 1,2,3 and create a dimension rank dimension and store following values there

Rank Rank Descr

-----------------

1 First

2 Second

3 Third

Now you can connect that fact table with Rank Dimension ( just think this example objectively, because there are lot of ways to handle this at report level) to get the Rank Descr.

But if you see we can avoid this join if we can directly put FIRST ,SECOND,THIRD values in fact itself becuase we don't have anything thing stored in rank dimension. So this way a dimension will be killed and will become a part of a fact table. So this particular FIRST,SECOND,THIRD part will be called DEGENERATED DEMENSION in the fact table.

Confirmed Dimesnion - We have seen lot of definitions of Conformed dimensions, here we will see what is it through Cognos eye. Whenever we create a model using framework manager,it is not necessary that one star schema would satisfy your needs, we might need more then one star schema groupings ( I will have separate blog on what is star schema grouping).Different star schemas uses different fact tables but they might use some dimensions which are common across different different star schemas. These common dimensions are called Conformed Dimensions, typically you can say Time Dimension is a conformed dimension.In a product related information model Product dimension may work like a confoemed dimension.

Junk Dimension - While doing modelling there are lot of things which do not classify as facts, so should we make dimensions out of them ? If we start doing this we will end up making lot of small small dimensions and will crowd our modeliing space.So we have to decide whether making separate dimension makes sense for something.Suppose there are 5 things we are not going to make separate dimension for, we will put all these details into one dimensions and call that dimension a junk dimension.Mainly junk dimesnion contains flags like how customer came to purchase something buy car or bike, he is living in rented home or having his own home,customer placed order via web,phone or by any other means etc. Now you can see by these details that theses kind of details do not deserve to have there own dimension.

Rank Rank Descr

-----------------

1 First

2 Second

3 Third

Now you can connect that fact table with Rank Dimension ( just think this example objectively, because there are lot of ways to handle this at report level) to get the Rank Descr.

But if you see we can avoid this join if we can directly put FIRST ,SECOND,THIRD values in fact itself becuase we don't have anything thing stored in rank dimension. So this way a dimension will be killed and will become a part of a fact table. So this particular FIRST,SECOND,THIRD part will be called DEGENERATED DEMENSION in the fact table.

Confirmed Dimesnion - We have seen lot of definitions of Conformed dimensions, here we will see what is it through Cognos eye. Whenever we create a model using framework manager,it is not necessary that one star schema would satisfy your needs, we might need more then one star schema groupings ( I will have separate blog on what is star schema grouping).Different star schemas uses different fact tables but they might use some dimensions which are common across different different star schemas. These common dimensions are called Conformed Dimensions, typically you can say Time Dimension is a conformed dimension.In a product related information model Product dimension may work like a confoemed dimension.

Junk Dimension - While doing modelling there are lot of things which do not classify as facts, so should we make dimensions out of them ? If we start doing this we will end up making lot of small small dimensions and will crowd our modeliing space.So we have to decide whether making separate dimension makes sense for something.Suppose there are 5 things we are not going to make separate dimension for, we will put all these details into one dimensions and call that dimension a junk dimension.Mainly junk dimesnion contains flags like how customer came to purchase something buy car or bike, he is living in rented home or having his own home,customer placed order via web,phone or by any other means etc. Now you can see by these details that theses kind of details do not deserve to have there own dimension.

Bookmarks in Cognos

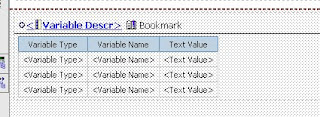

You can see here four columns have been added to the list , one column variable descr have been converted into a section. Unlock report and click Variable descr column and go to its properties

>Drill Through Definitions > Bookmark Tab

change SourceType value to 'DataItem Value' , Click OK.

Add a book mark just adjscant to variable descr and go to bookmarks properties.

Change source Type property to 'DataItem Value' and DataItemValue property to Varialbe Descr.

Now when you run this report this bookmark will have value of Variable descr value.

Suppose one of the value of Variable descr is 'India' , so now put a text item at the top of the report and go to its drill throug definition and go to bookmark tab and set sourcetype='text' and text=India, now you can run the report and get the output in pdf. In pdf when you click on that text item , it will directly take you to the palce where variable descr is having its value equal to India.

Subscribe to:

Comments (Atom)